In smart home systems, building facilities and networked appliances communicate and cooperate with one another to perform home services. Generally, these services are invisible and comprise a series of diverse functions handled by separate devices. In fact, a smart home can be regarded as a ‘smarter’ version of a home automation system with an added context-aware ability. Ma et al. (2005) define a ‘smart space’ as a space that must have some level of ability to perceive, recognize, analyze, reason about and anticipate a user’s existence and surroundings, so that it can take proper actions accordingly. In such an environment, computational intelligence can be regarded as embedded in the user’s environment, including the space around the users (Weiser, 1991), rather than in the individual devices. Depending on the level of context adaptation, a smart home may control the environment fully automatically or let the occupants run services and manipulate the space on their own.

In architectural practice, it has been recognized that there is a considerable communication gap between architects and users, which often results in designs or built environments that fail to satisfy users or meet their expectations. Some serious problems found after early occupancy must be solved through retrofitting, a common and costly process that we, as architects, try to avoid (Palmon et al., 2006). Architects who come up with design solutions often fail to convey their ideas fully to users. The problem usually stems from the fact that users cannot imagine how the design will turn out after the construction phase. Unlike architects, users are not trained, and their comprehension of three-dimensional space is limited. Such problems become even more significant in a smart home, where a great deal of interconnected equipment and complicated services are installed. These complex and invisible services can make the occupants’ role difficult over the whole smart home life-cycle, from the design process to the occupancy stage. As any interactive home will eventually be used by end users, providing a method to enhance their participation in, and comprehension of, how smart equipment and services will be installed and operated will become a major issue for the smart home industry. Efforts towards user-centered services can be found in a small number of projects, such as Barkhuus and Dey’s study of user acceptance of context-aware services (Barkhuus and Dey, 2003) and Leijdekkers and Gay’s user profile service (Leijdekkers and Gay, 2005). Nonetheless, to date no research has applied the user-centered approach to the architectural design stage.

The goal of this paper is to propose a new framework that allows smart home designers and smart home users to collaborate. Designers can configure the spatial interaction caused by context-aware services and let users experience the home services during the design stage. The framework can be regarded as an interface that connects the occupants to the smart sensing environment. To this end, a new framework integrating a Context-aware Building Data Model, Virtual Reality (VR) and web services is introduced. The new building data model is created based on the Structured Floor Plan (Choi et al., 2007) to handle the interactivity and complexity of smart home services. VR is applied to visualize the invisible and pervasive sensing networks running in the background and to provide an immersive environment for manipulating spatial interaction. Lastly, web service technology is used to increase system accessibility and to support interconnectivity with smart home equipment. This paper therefore examines how to create and implement a virtual space, using VR techniques, as a platform to simulate smart home service configuration.

In this paper, we propose a series of smart home platforms that enable home users to experience a smart virtual place through the Internet. In particular, our interactive virtual place differs from a conventional 3D space in that it embodies spatial context-aware information, including the spatial relationships among entities, activities and users. Avatars controlled by users can explore the place and perform a set of related activities according to the current context, which changes the virtual place and triggers its interactions. Consequently, the system can be used to simulate not only what the space will look like but also how users will interact with the smart environment based on predefined scenarios.

Our research is conducted through the following steps. First, similar and related systems are analyzed to determine the research direction and the evaluation model. Second, the essential elements for constructing the virtual smart environment are extracted. Third, a novel place data model is constructed. After that, a series of smart home prototypes, ‘PlaceMaker’, ‘V-PlaceLab’ and ‘V-PlaceSims’, is developed based on the place data model. Finally, the overall process by which smart home designers and users can utilize the prototypes is discussed.

Related Works

To propose a new smart home framework, it is necessary to understand several related subjects, including smart home environments, VR and behavioral research. This section describes the related state-of-the-art technology and clarifies how our research differs in approach.

Smart Home Environment

According to Chen and Kotz (2000), context-aware services can be classified as passive or active. Active context-aware services change their content autonomously on the basis of sensor data, whereas passive context-aware services only present the updated context to the users and let them specify how the application should change. Likewise, smart homes can be categorized as passive or active depending on the services they provide.
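To make the distinction concrete, the following Python sketch contrasts the two behaviors for a single lighting service. The `LightingService` class, the 100-lux threshold and the sensor reading are illustrative assumptions only; they are not part of Chen and Kotz’s (2000) classification or of any smart home system discussed here.

```python
from dataclasses import dataclass, field


@dataclass
class LightingService:
    """Hypothetical lighting service used only to illustrate the classification."""
    active: bool                      # True: active service; False: passive service
    lights_on: bool = False
    notifications: list = field(default_factory=list)

    def on_context(self, lux: float, threshold: float = 100.0) -> None:
        dark = lux < threshold        # assumed context derived from a light sensor
        if self.active:
            # Active: adapt autonomously on the basis of sensor data.
            self.lights_on = dark
        else:
            # Passive: only present the updated context; the user decides.
            self.notifications.append(f"Ambient light is {lux:.0f} lux")

    def user_command(self, turn_on: bool) -> None:
        """Explicit occupant command (the only way a passive service changes state)."""
        self.lights_on = turn_on


active = LightingService(active=True)
active.on_context(lux=40)
passive = LightingService(active=False)
passive.on_context(lux=40)
print(active.lights_on, passive.lights_on, passive.notifications)
```

In the passive case the lights stay off until the occupant, having seen the notification, calls `user_command`.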

Passive smart homes, which react to occupants’ commands, are widespread, whereas active smart homes, which demand interaction and invite guidance, have not yet been widely adopted in the housing market. Examples of active smart homes are The Aware Home (Kidd et al., 1999), Gator Tech Smart House (Hetal et al., 2005), Toyota Dream House PAPI (Sakamura, 2005) and NICT’s Ubiquitous Home (Minoh and Yamazaki, 2006). Most active smart homes are found in R&D projects because they require more advanced and costly technology that cannot yet be commercialized. Nonetheless, the barrier to smart home adoption does not stem from cost alone: the pervasiveness and invisibility of the devices, and their working capacity, also come with trade-offs.

Among active smart homes, The Aware Home (Kidd et al., 1999) is one of the first-generation laboratory houses for the elderly, developed at the Georgia Institute of Technology. The research home was inhabited by elderly people while simultaneously being tested and monitored by researchers. The research goal was to apply ubiquitous computing to everyday activities. A similar project is the Gator Tech Smart House (Hetal et al., 2005), developed by the Mobile and Pervasive Computing Laboratory at the University of Florida. With extensible technology based on the OSGi framework, the goal of this context-aware home was to create an ‘off-the-shelf’ smart house that the average user can buy, install and monitor without the aid of engineers. Compared with The Aware Home, the Gator Tech Smart House is more appliance-oriented; various smart functions for smart home appliances, home security systems and home assistant services have been developed. In Japan, a similar movement toward context-aware homes is represented by the Toyota Dream House PAPI (Sakamura, 2005). The home was developed under the ‘TRON’ project, a long-term project begun in 1984 that aims to create an ideal computer architecture (http://tronweb.super-nova.co.jp). The main goals for the smart home were to design and realize an environmentally friendly, energy-saving intelligent house in which the latest ubiquitous network computing technologies created by the ‘T-Engine’ project (Sakamura, 2006) could be tested and further developed. A more recent example of an active smart home in Japan is the Ubiquitous Home (Minoh and Yamazaki, 2006), developed at the National Institute of Information and Communications Technology (NICT). As with The Aware Home and the Gator Tech Smart House, families were invited to stay and test home services in the living laboratory. However, the Ubiquitous Home applied a ‘Mother-Child’ metaphor in which robots take care of the occupants: unconscious-type home robots controlled all services in the background, whereas visual-type interface robots communicated with the occupants.

Despite their different scopes and applications, the active smart homes above share the following characteristics: (1) building components and networked appliances communicate and cooperate with one another to perform context-aware services; (2) smart services are generally invisible and comprise a series of diverse functions handled by separate devices; (3) the home is capable of identifying and predicting its occupants’ actions by means of sensors and actuators, and then acting on their behalf by means of Artificial Intelligence (AI). These cases show that current smart home research and development aims at creating homes capable of understanding their inhabitants as much as possible. However, this research argues that the opposite approach, helping inhabitants understand the home, is more important and must be taken into account.

In addition, no current smart home can control the environment entirely on its own. Some smart home systems, such as NICT’s Ubiquitous Home (Minoh and Yamazaki, 2006) and LG HomNet (http://www.lghomnet.com), apply the concept of a ‘Home Mode’ to operate all smart services according to the current mode. For example, a home may offer a sleep mode, a wake-up mode, an away mode, etc. However, the operations for each mode may vary from one user to another; each user may have individual preferences on how the smart home should operate or be operated. Therefore, rather than letting the home understand the inhabitants, it is more important to inform users how the smart home can work and be operated.
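A minimal sketch of the ‘Home Mode’ idea follows, assuming each mode maps to a default set of device settings that an individual user may override. The mode names come from the text; the device names, values and the `HOME_MODES` and `USER_OVERRIDES` tables are hypothetical and are not taken from the Ubiquitous Home or LG HomNet systems.

```python
from typing import Dict

# Default device settings per home mode (hypothetical values).
HOME_MODES: Dict[str, Dict[str, object]] = {
    "sleep":   {"lights": "off", "aircon_c": 26, "audio": "off"},
    "wake_up": {"lights": "dim", "aircon_c": 24, "audio": "morning_call"},
    "away":    {"lights": "off", "aircon_c": None, "audio": "off"},
}

# Per-user overrides: the same mode may operate differently for each user.
USER_OVERRIDES: Dict[str, Dict[str, Dict[str, object]]] = {
    "alice": {"sleep": {"aircon_c": 25, "audio": "soft_music"}},
    "bob": {},
}


def settings_for(user: str, mode: str) -> Dict[str, object]:
    """Merge the mode defaults with the user's own preferences for that mode."""
    merged = dict(HOME_MODES[mode])
    merged.update(USER_OVERRIDES.get(user, {}).get(mode, {}))
    return merged


print(settings_for("alice", "sleep"))
print(settings_for("bob", "sleep"))
```

Keeping the overrides in an explicit table makes the per-user variation inspectable, which is the kind of knowledge the paragraph argues users should be given.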

Virtual Reality in Simulation

According to Weiss and Jessel (1998), one of the cardinal features of VR is that it provides a sense of actual presence in, and control over, the simulated environment. Simulating spatial reality plays a key role in duplicating the experience of real space (Oxman et al., 2004). VR platforms have therefore been extensively developed and exploited to simulate real spaces as virtual environments. In particular, under certain conditions, such as when a task is more meaningful, interesting or competitive to the user, the level of presence generally improves even in the absence of high immersion (Nash et al., 2000). Moreover, Oxman and colleagues (2004) introduced three design paradigms to induce presence in virtual environments: task-based design, scenario-based design and performance-based design. Such paradigms can be found in situation simulation games such as ‘The Sims 2’ (Ma et al., 2005), in which each user performs ordinary tasks imitating life in the real world. Gameplay depends on the emotional and behavioral characteristics of multiple users through complex scenarios. Oxman’s paradigms can therefore explain why the level of presence in a situation simulation game is high enough for players to immerse themselves in, and enjoy, the interaction in the virtual environment. Apart from these studies, a number of outstanding VR simulation platforms showing the same tendency have been developed. FreeWalk/Q (Nakanishi and Ishida, 2004), developed at Kyoto University, is a platform for supporting and simulating social interaction in Digital Kyoto City. Its goal was to integrate diverse technologies related to virtual social interaction, e.g. virtual environments, visual simulations and lifelike characters (Prendinger and Ishizuka, 2004).
In FreeWalk/Q, lifelike characters (referring to both avatars and agents) enable virtual collaborative events such as virtual meetings, virtual training and virtual shopping in distributed virtual environments. Furthermore, the system used ‘Q’, an extension of Scheme, a dialect of the Lisp programming language, as a scenario description language for describing interaction scenarios between avatars and agents. Unlike the research mentioned above, which emphasizes user-user or user-agent interaction, our approach focuses on the interaction between the user and the virtual space, enabling the context-aware services and functions found in physical smart spaces.

Virtual Reality in Behavioral and Architectural Simulation

Meanwhile, there have been attempts to study human behavior in particular kinds of places using VR. Wei and Kalay (2005) developed a behavioral simulation platform embedded with a usability-based building model. Their original building model, created in DXF format, is converted into Scalable Vector Graphics (SVG) format and then appended with non-graphical information. This model enables virtual users, acting as agents, to perform specific behaviors autonomously for each spatial building entity. Our research applies a similar concept of a spatial building model; it is, however, developed upon the Spatial Context-aware Building Data Model (Lertlakkhanakul et al., 2006). Another study, by Palmon and colleagues (2006), showed how a specific group of users, such as people with disabilities, can apply VR technology for pre-occupancy evaluation. This project was involved in the design of a home environment before the construction phase. The system used interaction with the virtual environment, via a joystick, to verify ease of navigation and object usability. However, the interaction between space and users through their avatars was rather limited to collision detection and changes in object attributes. Our research goal is also to create a spatial interaction management tool, but one focusing on the smart home environment, which requires a higher level of human-space interaction in the virtual environment.

Virtual Reality for Smart Environment

Recently, a new concept combining two distinct paradigms, called ‘Ubiquitous Virtual Reality’ (U-VR), has been introduced. According to Kim et al. (2006), VR focuses on the activities of a user in a Virtual Environment (VE) that is completely separated from the Real Environment (RE), whereas Ubiquitous Computing (ubiComp) focuses on the activities of a user in an RE. Although VR and ubiComp reside in different realms, they share the same purpose, i.e. to maximize human ability. Pfeiffer and colleagues (2005) presented a method for remote access to virtual environments based on established video conferencing standards. A wide range of clients, from mobile devices to laptops and workstations, was supported, making the virtual environments ubiquitously accessible. In addition, Kim and colleagues (Kim et al., 2006) described and explored U-VR in a broader sense related to ubiComp. By compensating for the weaknesses of VR with the help of ubiComp, they looked for ways to evolve VR in ubiComp environments and proposed a demonstration platform called Collaborative Wearable Mediated Attentive Reality. Our research differs from theirs in that the concept of U-VR is not applied to interoperability in communication methods and collaboration; rather, we investigate how VR can increase the usability of smart home contexts.

The Building Data Model for Smart Home

In this paper, we explore how to create and implement a virtual space, using VR techniques, as a platform to simulate smart home configuration. Because of the advanced technology they contain, smart homes require a novel simulation tool to help users understand a designed smart home configuration before the construction phase. Unfortunately, traditional CAD models contain only the graphical/geometric information of design elements (Wei and Kalay, 2005). They lack the spatial and other non-geometric information needed to create a smart virtual environment that can interact with virtual users.

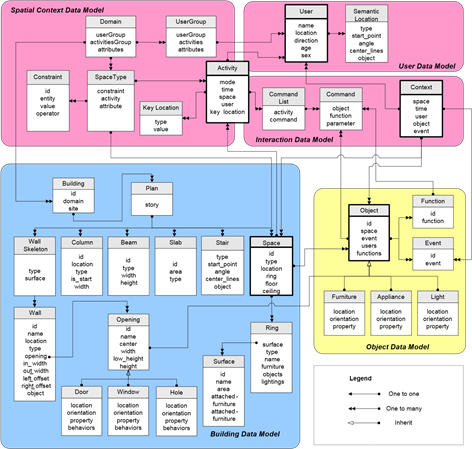

Fig. 1. Spatial Context-aware Place Data Model

At this stage, a method to give the virtual environment smart characteristics needs to be investigated. Given our goal, human-space interaction plays a key role in the simulation. As noted above, Ma et al. (2005) define a ‘smart space’ as a space with some level of ability to perceive, recognize, analyze, reason about and anticipate a user’s existence and surroundings, so that it can take proper actions accordingly. In short, the most basic characteristic of smartness is context semantics and awareness of a real space or environment (Dey, 2001). Based on this definition, a novel data model called the Spatial Context-aware Place Data Model has been defined. Unlike traditional CAD models, it embodies both geometric information and semantic information, including the spatial relationships among humans, objects and spaces. In particular, it enables the concept of a virtual place. Places differ from mere spaces in that they embody social and cultural values in addition to spatial configurations. It is the concept of place, not space, that connects architecture to its context and makes it responsive to given needs (Kalay, 2004). Figure 1 illustrates the structure of spatial context-aware place modeling; its main components are described in this section.

Virtual Building Data Model

The built environment consists of a large number of design elements, such as walls, doors, windows, stairs and columns. A virtual user needs to perceive and understand them to behave properly (Wei and Kalay, 2005). Furthermore, humans do not perceive architectural space as an image, but as a hierarchical composition of various elements (Lee et al., 2004). Therefore, our virtual building data model includes spatial information describing the configuration and hierarchy of spatial components, based on the concept of the Structured Floor Plan (Choi et al., 2007). The main advantage of this model is spatial reasoning: it systematically enables virtual users to perceive and recognize the space using the hierarchical relationships and spatial connectivity among building components. From the top down, the member classes of the virtual building model range from ‘Building’ to ‘Surface’. Among them, ‘Space’ functions as the main interface class connected to the other classes.
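The following sketch illustrates the two ideas in this paragraph: a top-down containment hierarchy and a connectivity network among spaces that supports spatial reasoning. Only ‘Building’, ‘Space’ and ‘Surface’ are named in the text; the intermediate `Floor` level, the door-based adjacency and the breadth-first route query are assumptions made for illustration, not the actual class structure of the Structured Floor Plan (Choi et al., 2007).

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Surface:
    name: str


@dataclass
class Space:
    name: str
    space_type: str
    surfaces: List[Surface] = field(default_factory=list)


@dataclass
class Floor:                      # assumed intermediate level, not named in the text
    level: int
    spaces: List[Space] = field(default_factory=list)


@dataclass
class Building:
    name: str
    floors: List[Floor] = field(default_factory=list)
    # Spatial connectivity: space name -> names of spaces reachable through a door.
    connections: Dict[str, List[str]] = field(default_factory=dict)

    def connect(self, a: str, b: str) -> None:
        self.connections.setdefault(a, []).append(b)
        self.connections.setdefault(b, []).append(a)

    def route(self, start: str, goal: str) -> Optional[List[str]]:
        """Breadth-first search over the connectivity graph (fewest doors passed)."""
        previous: Dict[str, Optional[str]] = {start: None}
        queue = deque([start])
        while queue:
            current = queue.popleft()
            if current == goal:
                path = []
                node: Optional[str] = current
                while node is not None:
                    path.append(node)
                    node = previous[node]
                return path[::-1]
            for nxt in self.connections.get(current, []):
                if nxt not in previous:
                    previous[nxt] = current
                    queue.append(nxt)
        return None               # goal not reachable from start


home = Building("demo_home", [Floor(1, [Space("bedroom", "bedroom"),
                                        Space("hall", "corridor"),
                                        Space("living", "living_room")])])
home.connect("bedroom", "hall")
home.connect("hall", "living")
print(home.route("bedroom", "living"))
```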

Virtual Object Data Model

Unlike traditional CAD models, our model includes not only building components but also objects such as furniture, equipment and appliances. In addition, our model is capable of spatial context-awareness. Smart objects therefore also contain their own functions and status to interact with users and other objects. Such interaction is activated by specific events performed by the virtual users, in the same way that sensors installed in smart objects detect such events in the real world. Each object (except doors and windows) must belong to at least one space, which enables it to communicate with other entities.
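A minimal sketch of a smart object is given below, assuming an object exposes handlers that change its status when a user event is sensed, and enforcing the rule that every object except doors and windows belongs to at least one space. The `SmartObject` class, the event names and the status fields are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

OPENINGS = {"door", "window"}     # exempt from the space-membership rule


@dataclass
class SmartObject:
    name: str
    kind: str
    spaces: List[str] = field(default_factory=list)
    status: Dict[str, object] = field(default_factory=dict)
    # Event name -> function applied to the object's status when the event is sensed.
    handlers: Dict[str, Callable[[Dict[str, object]], None]] = field(default_factory=dict)

    def __post_init__(self) -> None:
        if self.kind not in OPENINGS and not self.spaces:
            raise ValueError(f"{self.name} must belong to at least one space")

    def sense(self, event: str) -> bool:
        """Simulate the object's sensor detecting a user event; True if handled."""
        handler = self.handlers.get(event)
        if handler is None:
            return False
        handler(self.status)
        return True


bed = SmartObject("bed", "furniture", ["bedroom"], {"occupied": False},
                  {"lie_down": lambda s: s.update(occupied=True)})
door = SmartObject("front_door", "door")      # allowed without a space
print(bed.sense("lie_down"), bed.status)
```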

Spatial Context Data Model

Spatial context modeling handles additional non-geometric information attached to a space. It describes the typical characteristics and spatial configuration of the built environment. ‘Domain’ stores spatial information about the building type in the same manner as ‘Space Type’ does for a space. Generally, each space has a unique domain and space type. For example, a library and a home (different domains) differ greatly in spatial configuration: they require different spaces, activities and areas, used by different types of users. A living room and a dining room (different space types) need different furniture, temperatures and locations even though they belong to the same ‘home’ domain. In short, our spatial context data model serves as the spatial knowledge base typical of agent-based systems.
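The knowledge-base role can be sketched as a lookup from domain and space type to typical requirements. The entries below (furniture lists, temperature ranges) are invented placeholders that only illustrate the structure; they are not values from the paper’s model, and `missing_furniture` is a hypothetical helper.

```python
from typing import Dict, List, Tuple, TypedDict


class SpaceTypeSpec(TypedDict):
    furniture: List[str]
    temperature_c: Tuple[float, float]


# Domain -> space type -> typical characteristics (hypothetical entries).
KNOWLEDGE_BASE: Dict[str, Dict[str, SpaceTypeSpec]] = {
    "home": {
        "living_room": {"furniture": ["sofa", "tv"], "temperature_c": (22.0, 25.0)},
        "dining_room": {"furniture": ["table", "chair"], "temperature_c": (21.0, 24.0)},
    },
    "library": {
        "reading_room": {"furniture": ["desk", "chair", "shelf"], "temperature_c": (20.0, 23.0)},
    },
}


def missing_furniture(domain: str, space_type: str, placed: List[str]) -> List[str]:
    """Return the typical furniture for this space type that has not been placed yet."""
    spec = KNOWLEDGE_BASE[domain][space_type]
    return [item for item in spec["furniture"] if item not in placed]


print(missing_furniture("home", "living_room", ["sofa"]))
```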

Virtual User Data Model

To offer appropriate services to each user in a ubiquitous computing space, the system must be able to store and retrieve personal information precisely, since each user may have unique characteristics and behaviors. Even a single user can have different preferences in different situations. Personal preferences and needs, the persons one interacts with, and the set of devices each individual controls together define one’s personal communication space (Arbanowski et al., 2001). Such personal information is stored in the Virtual User Data Model and handled by the ‘User’ and ‘Activity’ classes.
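The sketch below shows one way the ‘User’ and ‘Activity’ classes could store situation-dependent preferences, assuming a preference is looked up first for the current situation and then falls back to a general default. The field names, the `"*"` default key and the situation labels are hypothetical; `contacts` and `devices` stand in for the other parts of the personal communication space.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Activity:
    name: str
    space_type: str                       # where the activity usually takes place


@dataclass
class User:
    name: str
    activities: List[Activity] = field(default_factory=list)
    # (preference, situation) -> value; situation "*" is the general default.
    preferences: Dict[tuple, object] = field(default_factory=dict)
    contacts: List[str] = field(default_factory=list)   # persons to interact with
    devices: List[str] = field(default_factory=list)    # devices this user controls

    def preference(self, key: str, situation: str) -> Optional[object]:
        """Return the situation-specific value if present, else the general default."""
        return self.preferences.get((key, situation), self.preferences.get((key, "*")))


alice = User("alice",
             [Activity("sleeping", "bedroom")],
             {("room_temp_c", "*"): 24, ("room_temp_c", "sleeping"): 26})
print(alice.preference("room_temp_c", "sleeping"), alice.preference("room_temp_c", "cooking"))
```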

Interaction Data Model

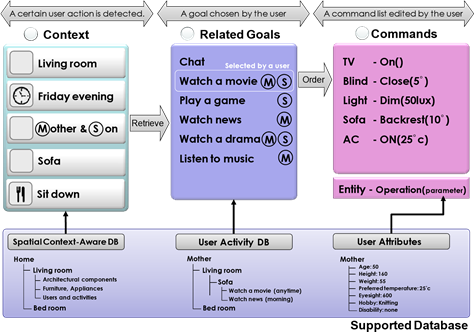

All potential interaction channels between a virtual user and the smart virtual environment are taken into account. Interaction in the virtual environment takes place by means of the Interaction Data Model, which functions as an interface between the Virtual User Data Model and the other models. In other words, it realizes the concept of human-centered service by applying context-aware ability. For example, a virtual user can perform specific actions with each object through the connection between the ‘Event’ class stored in the Virtual Object Data Model and the ‘User’ class; this enables a user to sit or lie on a bed. This linkage is created and handled by the ‘Context’ class. Context is any information that can be used to characterize the situation of an entity (Ma et al., 2005). It serves as the key transaction and the initial status for any possible interaction by connecting all the components, such as space, user, object, activity and event. Once a specific event performed by a user is detected, all related activities are retrieved as the user’s potential goals. Each activity contains a set of commands for operating all related objects and services. More details on how the interaction model works are given at the end of this paper.
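The retrieval step described above can be sketched as follows, assuming a context record links a user, a space, an object and an event, and that activities are indexed by the (object, event) pair that triggers them. The sofa and bed activities follow examples given elsewhere in this paper; the command strings and the `ACTIVITY_INDEX` table are hypothetical, since the paper does not specify a command format.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Context:
    user: str
    space: str
    obj: str
    event: str


@dataclass
class Activity:
    name: str
    commands: List[str]                   # commands for related objects and services


# (object, event) -> candidate activities (hypothetical entries).
ACTIVITY_INDEX: Dict[Tuple[str, str], List[Activity]] = {
    ("sofa", "sit"): [Activity("watching_tv", ["tv.on", "lights.dim"]),
                      Activity("reading", ["reading_lamp.on"])],
    ("bed", "lie"): [Activity("sleeping", ["lights.off", "aircon.set_sleep"])],
}


def potential_goals(ctx: Context) -> List[Activity]:
    """Retrieve all activities related to the detected event as the user's potential goals."""
    return ACTIVITY_INDEX.get((ctx.obj, ctx.event), [])


ctx = Context(user="alice", space="living_room", obj="sofa", event="sit")
goals = potential_goals(ctx)
print([g.name for g in goals])
print(goals[0].commands)
```

The sketch stops at retrieval; choosing among several potential goals (here, watching TV or reading) would require further context or a decision by the user.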

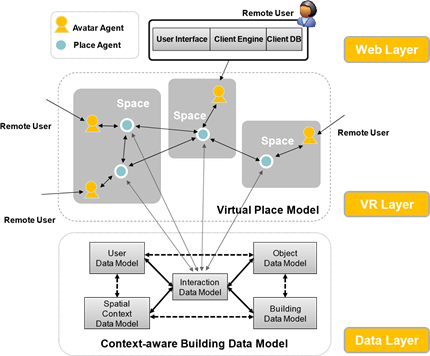

Virtual Smart Home Framework

In the previous section, the building data model for the virtual smart home was introduced. It serves as the kernel that enables context-aware interaction: the system can specify who is doing what action, in which area, with which object, for which purpose. In addition, the spatial network can be used to create location-based services as well as to enable spatial reasoning. However, to create a virtual smart home environment for simulation, the virtual environment itself must be developed, with two upper layers over the data model. Figure 2 shows the holistic framework of the virtual smart home. At the top level, the web layer connects the users to the virtual smart home model. At the intermediate level, the agent layer reasons about user actions in the virtual environment and has access to the data model at the bottom layer. The agent layer can be regarded as the invisible robots or the smart home server of a physical smart home. The process of creating the virtual environment and the two layers are described in this section.

Virtual Place Modeling Process

At the lowest layer, the overall mechanisms of the virtual architecture are driven by the Building Data Model for smart homes. The Spatial Context-aware Data Model can store semantic information and spatial context for smart architecture in addition to geometric data. The virtual place making process begins with PlaceMaker, our spatial context-aware CAD modeling system, in which an architect designs a virtual home according to the users’ preferences. The output model therefore contains both geometric and semantic spatial information, including user and activity lists. The next step is to insert smart objects, including their functions and events, using a tool called ‘V-PlaceLab’. Here, avatars are inserted as simulated users to create and test scenarios of spatial usability, and the linkage between user activities and object operations is applied. Finally, the smart home model is exported, embedded in a web page and uploaded to an online platform called ‘V-PlaceSims’. More details on PlaceMaker, V-PlaceLab and V-PlaceSims can be found in Section 5. Once a user has registered and entered his or her personal information, the entire virtual model is ready to be used. Figure 3 shows the virtual place making process.
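The three-stage process can be sketched as a pipeline in which each stage enriches the model produced by the previous one. The stage functions, dictionary keys and placeholder geometry are hypothetical stand-ins for PlaceMaker, V-PlaceLab and V-PlaceSims; the sketch only shows the ordering and the hand-off between stages.

```python
from typing import Dict, List


def placemaker_stage(users: List[str], activities: List[str]) -> Dict[str, object]:
    """Stage 1 (PlaceMaker): geometry plus semantic spatial information (placeholders)."""
    return {"geometry": ["walls", "openings"], "spaces": ["bedroom", "living_room"],
            "users": users, "activities": activities}


def placelab_stage(model: Dict[str, object], links: Dict[str, str]) -> Dict[str, object]:
    """Stage 2 (V-PlaceLab): insert smart objects and link each activity to one."""
    missing = [a for a in model["activities"] if a not in links]
    if missing:
        raise ValueError(f"activities without a linked smart object: {missing}")
    return {**model, "activity_links": links}


def placesims_stage(model: Dict[str, object], registered: List[str]) -> bool:
    """Stage 3 (V-PlaceSims): the uploaded model is ready once its users have registered."""
    return set(model["users"]) <= set(registered)


model = placelab_stage(placemaker_stage(["alice"], ["sleeping", "watching_tv"]),
                       {"sleeping": "bed", "watching_tv": "tv"})
print(placesims_stage(model, ["alice"]))
print(placesims_stage(model, []))
```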

Fig. 2. Virtual Smart Home Framework

Fig. 3. Virtual Place Making Process

Home and User Agents

A multi-user environment is created using client-server technology. The system comprises three modules: a client module, a web application and a server. A user interacts with other users and the virtual environment through the user interface layer provided in a client browser. An avatar agent and a place agent running in the web application layer sense the ad hoc context and commit changes to the virtual world.

The main computation processes of our agents are based on the UCVA Agent Model (Maher and Gu, 2002). The agents are defined as ‘reactive’ agents that respond to expected events in the virtual world. Their main processes are sensation, perception and action. The place agent handles context-aware reasoning, including social event definition, as well as spatial interaction control between users and the virtual space. For each space, it collects context information such as the space type, the number of users and the current activity, and compares it with the social event definitions to determine the current event in that space. The avatar agent, in contrast, handles user interaction. Each user has his or her own avatar agent, which senses the user’s actions, transmits the data to the place agent and waits for the response.
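The following sketch follows the sensation, perception and action cycle described above for one place agent and its avatar agents. The social event definitions, the matching rule (space type, minimum number of users performing an activity) and all class names are hypothetical; the UCVA Agent Model (Maher and Gu, 2002) itself is not reproduced here.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class SocialEvent:
    name: str
    space_type: str
    min_users: int
    activity: str


@dataclass
class PlaceAgent:
    space_type: str
    definitions: List[SocialEvent]                 # checked in order, most specific first
    occupants: Dict[str, str] = field(default_factory=dict)   # user -> current activity

    def sense(self, user: str, activity: str) -> None:         # sensation
        self.occupants[user] = activity

    def perceive(self) -> Optional[str]:                        # perception
        for ev in self.definitions:
            count = sum(1 for a in self.occupants.values() if a == ev.activity)
            if ev.space_type == self.space_type and count >= ev.min_users:
                return ev.name
        return None

    def act(self, user: str, activity: str) -> Optional[str]:   # action: reply to avatar agent
        self.sense(user, activity)
        return self.perceive()


@dataclass
class AvatarAgent:
    user: str
    place: PlaceAgent

    def on_user_action(self, activity: str) -> Optional[str]:
        """Transmit the user's action to the place agent and wait for its response."""
        return self.place.act(self.user, activity)


definitions = [SocialEvent("family_dinner", "dining_room", 2, "eating"),
               SocialEvent("solo_meal", "dining_room", 1, "eating")]
dining = PlaceAgent("dining_room", definitions)
first = AvatarAgent("alice", dining).on_user_action("eating")
second = AvatarAgent("bob", dining).on_user_action("eating")
print(first, second)
```

The agents are purely reactive in this sketch: each response is computed from the current context only, without planning.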

Web Layer and Graphical User Interface

At the top level, a web service with a client-server topology is constructed to enable online simulation. Users connect to the web server via HTTP through the system homepage. On the one hand, the dynamic web pages are written in ASP script embedding an ActiveX control (the stage control in the interface) implemented in C++. The control engine is automatically downloaded and installed on the first visit. Its task is to handle user interaction and to render the virtual environment on the ActiveX control. The client side also includes a texture library and an avatar expression and motion database used for rendering. On the other hand, the server hosts the main computation engine, in which the place and avatar agents are registered to update the virtual place context and to connect to the database at the lower level. The ActiveX control works with other dynamic controls on the web pages to enable user actions and display the updated virtual smart home environment, using stream sockets to synchronize clients with the server.
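Because a stream socket delivers a byte stream rather than discrete messages, synchronizing clients with the server needs some form of message framing. The paper does not describe its wire format; the sketch below assumes length-prefixed JSON messages and uses Python’s standard `socket` and `struct` modules, with `socket.socketpair()` standing in for a real client-server connection. The message fields are hypothetical, and this is not the ActiveX/C++ implementation.

```python
import json
import socket
import struct


def send_msg(sock: socket.socket, payload: dict) -> None:
    """Send one JSON message prefixed with its 4-byte big-endian length."""
    data = json.dumps(payload).encode("utf-8")
    sock.sendall(struct.pack("!I", len(data)) + data)


def _recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes, looping because recv may return fewer."""
    buf = b""
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("socket closed mid-message")
        buf += chunk
    return buf


def recv_msg(sock: socket.socket) -> dict:
    """Read one framed message, however its bytes were split in transit."""
    (length,) = struct.unpack("!I", _recv_exact(sock, 4))
    return json.loads(_recv_exact(sock, length).decode("utf-8"))


server, client = socket.socketpair()
# The client reports an avatar action; the server answers with an updated object state.
send_msg(client, {"type": "action", "avatar": "alice", "action": "lie", "object": "bed"})
request = recv_msg(server)
send_msg(server, {"type": "update", "object": "lights", "state": "off"})
reply = recv_msg(client)
print(request["action"], reply)
server.close()
client.close()
```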

Virtual Smart Home Applications

Based on the virtual smart home framework described previously, we created a series of virtual smart home applications, PlaceMaker, V-PlaceLab and V-PlaceSims, to realize the framework. Each application is described in this section.

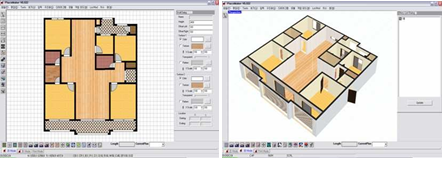

PlaceMaker

PlaceMaker is our modeling tool for creating intelligent building models bound to spatial context. When an instance of a building component is created in PlaceMaker, its spatial context is automatically generated from a library along with the geometric data. The system is designed to handle various building domains and space types; buildings created within different domains contain different spatial characteristics and spatial contexts. Figure 4 shows the entire interface of PlaceMaker. There are three visualization modes: two-dimensional mode (2D mode), three-dimensional mode (3D mode) and Print mode. Users can freely switch back and forth among these modes. By default, 2D mode is active. In this mode, users can create a space by drawing enclosing walls. A space can be composed of building components such as walls, openings and furniture, as described in the Spatial Context-aware Place Data Model. All instances can be modified in real time through their parameters in dialogs. Basic CAAD modeling and operation tools are also provided. A space type must be assigned to each space to bind it to its spatial context, which enables various features including constraint-based design, automated spatial networks and procedural modeling. More information on PlaceMaker can be found in Lertlakkhanakul and colleagues (2006).
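The sketch below illustrates two steps from this paragraph under simplifying assumptions: a space is accepted only if its walls form a closed loop, and assigning a space type binds it to a spatial-context entry that drives a simple constraint check. Walls are reduced to 2D line segments, the area is computed with the shoelace formula, and the `MIN_AREA` values are hypothetical placeholders rather than PlaceMaker’s actual library.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Wall = Tuple[Point, Point]

# Hypothetical spatial-context library: space type -> minimum floor area (m^2).
MIN_AREA = {"bedroom": 9.0, "bathroom": 3.0}


def polygon_from_walls(walls: List[Wall]) -> List[Point]:
    """Return the enclosing polygon if consecutive walls form a closed loop."""
    for (_, end), (next_start, _) in zip(walls, walls[1:] + walls[:1]):
        if end != next_start:
            raise ValueError("walls do not enclose a space")
    return [start for start, _ in walls]


def area(poly: List[Point]) -> float:
    """Shoelace formula for a simple (non-self-intersecting) polygon."""
    s = sum(x1 * y2 - x2 * y1 for (x1, y1), (x2, y2) in zip(poly, poly[1:] + poly[:1]))
    return abs(s) / 2.0


def bind_space_type(walls: List[Wall], space_type: str) -> dict:
    """Create a space, bind its space type and check the area constraint."""
    a = area(polygon_from_walls(walls))
    return {"space_type": space_type, "area": a, "ok": a >= MIN_AREA[space_type]}


room = [((0, 0), (4, 0)), ((4, 0), (4, 3)), ((4, 3), (0, 3)), ((0, 3), (0, 0))]
print(bind_space_type(room, "bedroom"))
```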

Fig. 4. The graphical user interface of PlaceMaker: 2D mode (left) and 3D mode (right)

V-PlaceLab

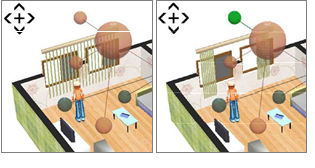

In addition to spatial information, user information is a main part of constructing a virtual place that embeds human-space interaction. Based on the Place Data Model, the next task is to include activities and their locations in our model. The activity entity combines information on users, spaces and locations to form a place. In other words, it is possible to retrieve information about available activities, their locations and their users, which is useful for any context-aware or location-based system. V-PlaceLab (Lee et al., 2008) has been developed for this purpose. It is a simulation tool for smart homes that enables designers to create scenarios demonstrating how users can utilize the space. A scenario is created by setting the interactions among spaces, objects and human behaviors. Smart objects can be inserted into a model previously created in PlaceMaker. Each object contains not only geometry but also information on activities and their key locations. For instance, a sofa functions as an action point for watching TV and reading, while a bed is a location for sleeping. All information is merged into one virtual building. Each activity is associated with a home service and commands in the Interaction Data Model. With this interaction embedded in the virtual model, smart home designers can test the home functions by controlling avatars. Invisible services are represented as balloons over the avatars. Figure 5 shows screenshots of V-PlaceLab. A series of avatar actions can be recorded as a scenario and played back as an animation. The resulting virtual smart home is uploaded to the V-PlaceSims server for collaboration between designers and smart home users.
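Scenario recording and playback can be sketched as an ordered log of avatar actions that is replayed step by step, with each step’s associated service rendered as a text ‘balloon’. The object-to-activity entries follow the examples in the text (sofa for watching TV and reading, bed for sleeping); the service descriptions, the timestamp field and the `Scenario` class are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterator, List, Tuple

# Object -> activities it supports (examples from the text).
OBJECT_ACTIVITIES: Dict[str, List[str]] = {"sofa": ["watching_tv", "reading"],
                                           "bed": ["sleeping"]}
# Activity -> home service shown in the balloon (hypothetical descriptions).
SERVICES: Dict[str, str] = {"watching_tv": "TV on, lights dimmed",
                            "reading": "reading lamp on",
                            "sleeping": "lights off, air-conditioner in sleep mode"}


@dataclass
class Scenario:
    steps: List[Tuple[float, str, str, str]] = field(default_factory=list)

    def record(self, t: float, avatar: str, obj: str, activity: str) -> None:
        """Record one avatar action, rejecting activities the object does not support."""
        if activity not in OBJECT_ACTIVITIES.get(obj, []):
            raise ValueError(f"{obj} does not support {activity}")
        self.steps.append((t, avatar, obj, activity))

    def play_back(self) -> Iterator[str]:
        """Yield one balloon caption per recorded step, in time order."""
        for t, avatar, obj, activity in sorted(self.steps):
            yield f"[{t:>5.1f}s] {avatar} @ {obj}: {SERVICES[activity]}"


s = Scenario()
s.record(0.0, "alice", "sofa", "watching_tv")
s.record(30.0, "alice", "bed", "sleeping")
for balloon in s.play_back():
    print(balloon)
```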

Fig. 5. The graphical user interface of V-PlaceLab

V-PlaceSims

V-PlaceSims is developed as an online virtual environment platform for design collaboration between the project architect and the users, as our research emphasizes the collaborative process of smart home design. First, the architect provides a well-designed smart virtual home equipped with smart objects. As mentioned previously, ActiveX technology embedded in Active Server Pages (ASP) was chosen as the development platform. After the complete virtual model is uploaded to our web server, users can log in and control their avatars to explore the virtual smart home over their Internet connection. Users can navigate and interact with the virtual environment as explained in detail in the following section. The graphical user interface of V-PlaceSims is divided into four main parts: the Stage, the Property panel, the Command panel and the Action panel. Occupying the largest display area, the ‘Stage’ shows the interactive virtual environment. It also includes a chat textbox at the bottom and a dialog at the top for communication. Located on the left, the ‘Property’ panel includes the Status panel, the Mode-setting panel and the Object Properties panel. Here users can perform various functions, including setting their information, preferences and preferred activities for each room, as well as running a simulation. In addition, architects are privileged to modify the spatial configuration, for example by inserting, removing and moving objects. Located at the bottom of the screen, the ‘Command’ panel shows how the virtual model interacts with users by displaying the sequence of home networking commands. Lastly, the ‘Action’ panel, also located at the bottom, shows the actions available in each mode. Figure 6 shows a screenshot of V-PlaceSims.

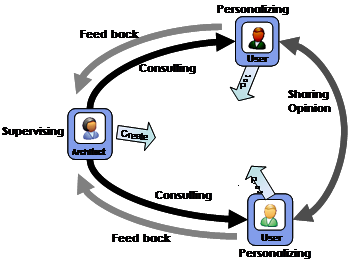

Within V-PlaceSims, multiple users can explore the space simultaneously and exchange opinions through the designated chat textbox and their avatars' body expressions. Likewise, users can receive assistance and send feedback to the designer through the same communication channels. Moreover, users can interact with the smart environment and hold a certain permission level for managing the smart service configuration. Figure 7 shows an overall view of the collaboration model in V-PlaceSims.
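One possible way to express such permission levels is sketched below in Python. The ordered levels, the action names and the default mapping between them are hypothetical; the paper states only that users hold some permission level, that architects may modify the spatial configuration and that the system administrator edits user permissions (in Security mode). Because Space-editing mode also lets users rearrange furniture, the defaults here are assumptions an administrator would be expected to adjust.

```python
from enum import IntEnum


class Permission(IntEnum):
    """Hypothetical ordered permission levels (not specified in the paper)."""
    VISITOR = 0    # explore, chat, use avatar body expressions
    OCCUPANT = 1   # additionally manage the smart service configuration
    ARCHITECT = 2  # additionally modify the spatial configuration
    ADMIN = 3      # additionally edit other users' permissions


# Assumed default minimum level per action; an administrator could change these.
REQUIRED = {
    "explore": Permission.VISITOR,
    "chat": Permission.VISITOR,
    "configure_service": Permission.OCCUPANT,
    "edit_space": Permission.ARCHITECT,
    "edit_permissions": Permission.ADMIN,
}


def can(level: Permission, action: str) -> bool:
    """True if a user at `level` may perform `action`."""
    return level >= REQUIRED[action]


def set_level(actor: Permission, levels: dict[str, Permission],
              username: str, new_level: Permission) -> None:
    """Change a user's level; only an administrator may do this."""
    if not can(actor, "edit_permissions"):
        raise PermissionError("only an administrator may edit permissions")
    levels[username] = new_level


levels = {"architect": Permission.ARCHITECT, "mother": Permission.OCCUPANT}
set_level(Permission.ADMIN, levels, "child", Permission.VISITOR)
print(can(levels["mother"], "configure_service"))  # prints True
print(can(levels["child"], "configure_service"))   # prints False
```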

Fig. 6. A screenshot of V-PlaceSims

Fig. 7. Collaboration model in V-PlaceSims

Demonstration

V-PlaceSims provides a set of functions divided into five modes: Avatar, Activity, Security, Space-editing and Simulation. Users select the current mode with the navigation controls in the Mode-setting panel. The modes are summarized as follows: (1) In 'Avatar' mode, users can choose their avatar and edit their preferences and profiles, such as favorite TV programs, water temperature for showering, favorite song for the morning call, room temperature for sleeping, and so on. (2) In 'Activity' mode, users can specify which activities they would like to do in each room. The activities are grouped by home mode, such as sleeping, cooking and going-out modes. (3) In 'Security' mode, users can modify their account information, such as username and password. The system administrator can also edit user permissions in this mode. (4) In 'Space-editing' mode, users can add, transform (move and rotate) or delete furniture as well as set room textures. Thus, users can freely design the furniture layout. (5) Users switch to 'Simulation' mode after completing the settings in the other modes. The purpose of this mode is to let the designer or the users customize how the home components and appliances are used according to the users' needs, since each user may have personal preferences in living style. For example, it can show how the services in a bedroom, including the illumination, air-conditioning and audio systems, function together to support a user going to bed in sleeping mode (e.g. when the user lies down on the bed at night). Here, the designer can show the users how the smart objects can be operated, or let them decide on their own how they want to use the space.
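The five modes and the sleeping-mode example might be represented roughly as follows. This Python sketch is illustrative only: the preference key `sleep_room_temp_c`, the night window of 22:00 to 05:59, the default temperature and the returned service settings are assumptions, not values taken from V-PlaceSims.

```python
from dataclasses import dataclass, field
from enum import Enum


class Mode(Enum):
    """The five V-PlaceSims modes named in the text."""
    AVATAR = "Avatar"
    ACTIVITY = "Activity"
    SECURITY = "Security"
    SPACE_EDITING = "Space-editing"
    SIMULATION = "Simulation"


@dataclass
class UserProfile:
    name: str
    # Set in Avatar mode; keys are hypothetical examples.
    preferences: dict[str, object] = field(default_factory=dict)
    # Set in Activity mode: room -> preferred activities.
    room_activities: dict[str, list[str]] = field(default_factory=dict)


def sleeping_mode(profile: UserProfile, hour: int, on_bed: bool) -> dict[str, object]:
    """Coordinate bedroom services when the user lies on the bed at night.

    'Night' is assumed to be 22:00-05:59. Returns {} when not triggered.
    """
    is_night = hour >= 22 or hour < 6
    if not (on_bed and is_night):
        return {}
    return {
        "illumination": "off",
        # Fall back to an assumed default if the user set no preference.
        "air_conditioning_c": profile.preferences.get("sleep_room_temp_c", 26),
        "audio": "off",
    }


mother = UserProfile("mother", {"sleep_room_temp_c": 25}, {"bedroom": ["sleeping"]})
print(sleeping_mode(mother, hour=23, on_bed=True))
print(sleeping_mode(mother, hour=14, on_bed=True))  # prints {}
```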

Fig. 8. A typical spatial interaction scenario

The following scenario, shown in Figure 8, demonstrates how V-PlaceSims is used. A mother and her child enter the virtual living room (through their avatars) on a Friday evening. As the smart home designer, the architect guides the users to sit on a sofa in that room. As soon as they sit on the sofa, the sitting event activates the smart interaction processes, which the architect has set up in advance. The system then searches the users' personal information for all related activities and displays them on the stage right after the users have sat down. Note that when several users trigger the same event at the same time, only the main user designated by the architect has the right to select his/her preferred activity. The mother, as the main user, then selects an activity as her goal. After that, the system lists all object commands designated for the chosen goal. Each object command contains both functions and parameters. The parameters are defined automatically from the user's personal information, such as preferred room temperature, lighting illumination and color, music volume, and so on. Once the mother has seen the command list, she can rearrange its order as well as remove commands and add new ones in the panel. After fixing all commands, she can run the simulation and see the resulting changes in the virtual environment. Example screenshots of the simulation are shown in Figure 9. Currently, the system responds to users by replacing furniture models (e.g. replacing an open curtain with a closed one), by geometric representations of smart services (spheres and lines over objects) and by balloon messages, as shown in Figures 9 and 10.
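The scenario steps (event, candidate activities, selection by the main user, preference-filled commands, editing and simulation) can be sketched in Python as below. All names, the event table, the command templates and the preference keys are hypothetical stand-ins; the sketch only mirrors the order of steps described in the text and does not reproduce the V-PlaceSims implementation, which was built with ActiveX and ASP.

```python
from dataclasses import dataclass, field


@dataclass
class User:
    name: str
    preferred_activities: list[str]
    preferences: dict[str, object] = field(default_factory=dict)


@dataclass
class Command:
    device: str
    function: str
    params: dict[str, object]


# Hypothetical: activities the architect linked to the sofa-sitting event.
EVENT_ACTIVITIES = {"sit_on_sofa": ["watch TV", "read", "listen to music"]}

# Hypothetical command templates; each parameter value names a preference key.
TEMPLATES = {
    "watch TV": [("tv", "on", {"channel": "favorite_tv_program"}),
                 ("aircon", "set", {"temp_c": "room_temp_c"})],
    "read": [("lamp", "on", {"lux": "reading_lux"})],
}


def candidate_activities(event: str, users: list[User]) -> list[str]:
    """Activities linked to the event that at least one present user prefers."""
    return [a for a in EVENT_ACTIVITIES.get(event, [])
            if any(a in u.preferred_activities for u in users)]


def choose(activity: str, chooser: User, users: list[User], main_user: User) -> str:
    """When several users trigger the same event, only the main user may choose."""
    if len(users) > 1 and chooser is not main_user:
        raise PermissionError(f"{chooser.name} is not the main user")
    return activity


def build_commands(activity: str, user: User) -> list[Command]:
    """Fill each template parameter from the user's personal preferences."""
    return [Command(dev, fn, {k: user.preferences.get(key) for k, key in params.items()})
            for dev, fn, params in TEMPLATES.get(activity, [])]


def run(commands: list[Command]) -> dict[str, tuple[str, dict]]:
    """Apply commands in order to a toy virtual-environment state."""
    state: dict[str, tuple[str, dict]] = {}
    for cmd in commands:
        state[cmd.device] = (cmd.function, cmd.params)
    return state


mother = User("mother", ["watch TV"], {"favorite_tv_program": 7, "room_temp_c": 24})
child = User("child", ["read"], {"reading_lux": 500})
present = [mother, child]

options = candidate_activities("sit_on_sofa", present)  # ['watch TV', 'read']
goal = choose("watch TV", mother, present, main_user=mother)
cmds = build_commands(goal, mother)
cmds.reverse()                                # the mother reorders the list
cmds.append(Command("curtain", "close", {}))  # ...and adds a command
print(run(cmds))
```

Keeping the command templates separate from the preference values reflects the text's point that parameters are filled in automatically from each user's personal information, so the same activity yields different settings for different users.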

Fig. 9. Screenshots of V-PlaceSims: (A) Space Edit Mode, (B) and (C) Simulation Mode

Fig. 10. Visualization of smart home services by replacing objects and using a network of spheres

Conclusion

In this paper, a new virtual place framework has been proposed to enhance the collaboration between smart home designers and end users. The framework incorporates a range of processes, beginning with the definition of a generic data model, followed by the development of virtual place applications including PlaceMaker, V-PlaceLab and V-PlaceSims. New interactive characteristics of the building data model have been introduced. From the designers' point of view, our framework offers a new method to create a virtual smart home model embedded with human-space interaction and service information. Building models created by PlaceMaker can be furnished with smart objects holding activity information. With V-PlaceSims, a smart home user can control his/her avatar to interact with the smart environment, much as players do in 3D computer games. The interaction refers to a sequence of smart object commands evoked by certain user actions. In contrast to agent-based simulation (which has no ad-hoc user interaction), our approach uses data input by actual users as the avatars' actions and behaviors in the virtual place. This leads to a paradigm shift in the user's role from passive listener to active actor. In addition, group collaboration is possible, in which users can give feedback and update the spatial interaction instantly.

By means of the Context-aware Building Data Model, human-space interaction, which is vital for simulating smart home functions and services, is realized in the virtual environment. Together with VR, the platform is capable of visualizing invisible services, performing real-time interactions with the home and showing the users how it can be configured and operated according to their individual needs. In addition, the web-based service increases the system's accessibility and usability, as users can log in from anywhere to collaborate with each other. Because it is extremely difficult for users to gain insight into smart service configuration, our research takes a user-oriented approach by applying the concept of U-VR to the smart home simulation model. The long-term goal is to close the considerable gap between the architect and the user in the smart home design process. Ultimately, our framework can give users a better understanding of how the smart and interactive virtual architectural models designed by architects will be constructed and used. It is expected that the research will eventually lead to a decline in design failures and problems in the built environment during the building occupancy period.